Verifying AI-generated code: Manage Code Like You Manage Infrastructure

Software systems have always required verification. Not just “does it run?” but a whole set of questions that teams need to answer before they can trust what they’re shipping.

The processes we’ve built over decades, such as test requirements, code reviews, agile ceremonies, documentation, and even demos, exist to answer these questions. Most of them are still important, if not more important, when shipping AI-generated code. But AI generates code faster than these processes can keep up with, and our confidence in what we ship erodes.

TL;DR:

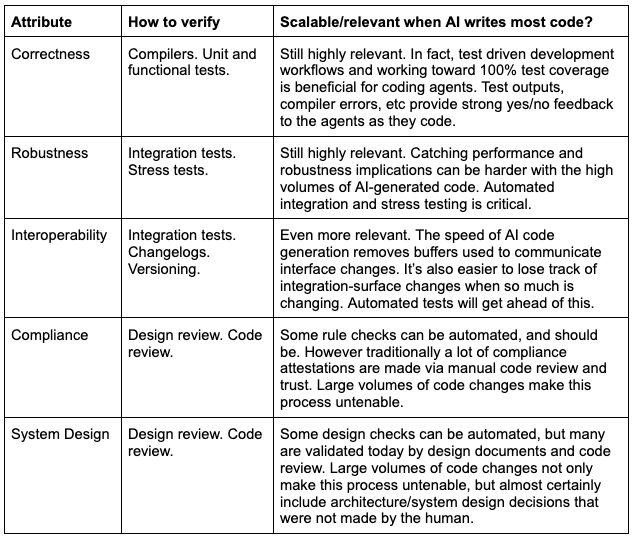

- Correctness, robustness, and interoperability verification practices scale to AI-generated development workflows

- Compliance, architecture, and system design verification practices do not

- System-level state management is what’s needed to close the gap

Building trust in our software

When building and shipping code, we want to be confident that it does what we say it does. To build that confidence, we want to make sure that…

- It’s correct. Does the code do what it’s supposed to do?

- It’s robust. Will this code break under pressure?

- It’s interoperable. Are downstream/upstream systems able to rely on it?

- It’s compliant. Does the current state of the system meet the requirements and constraints we have?

- It’s architecturally consistent. Does the current state of the system match the expected system state?

How do we “make sure” of each of these things? How does verification work when AI generates most of our code?

Compliance and architecture/system design are not quite like the others. Code changes that pass automated tests do not necessarily match the attributes we expect the system to have. The buzzy term for these attributes is “semantics”: the semantic attributes of a system are not verified by unit tests.

For example, there might be a principle that payment data never flows through a certain service. The code could be correctly written, pass all the tests, integrate as expected, but not meet this constraint. Traditionally, items like this are maintained because developers know the principles when they’re writing and reviewing code.
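To make the example concrete, a principle like this can be written down as data instead of living only in reviewers’ heads. The following is a minimal sketch, assuming the system’s data flows are available as a list of records; the `Flow` type, the `find_violations` helper, and the service names are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """Hypothetical record: which kinds of data move from one service to another."""
    source: str
    target: str
    data_kinds: frozenset


def find_violations(flows, data_kind, forbidden_service):
    """Return every flow that carries `data_kind` into or out of `forbidden_service`."""
    return [
        f for f in flows
        if data_kind in f.data_kinds and forbidden_service in (f.source, f.target)
    ]


# Illustrative service names, not taken from any real system.
flows = [
    Flow("checkout", "payments", frozenset({"payment", "order"})),
    Flow("checkout", "analytics", frozenset({"order"})),
    # This flow could pass every unit and integration test and still break the principle.
    Flow("payments", "analytics", frozenset({"payment"})),
]

violations = find_violations(flows, "payment", "analytics")
for v in violations:
    print(f"violation: payment data flows {v.source} -> {v.target}")
```

Nothing in this check looks at whether the code is correct. It only asks whether the shape of the system matches a stated rule, which is exactly the question unit tests leave unanswered.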

Developer knowledge and manual review will not deliver 100% architectural and constraint compliance when applied to the massive amounts of code brought in by coding agents. Even worse, engineers no longer have the time to build up their mental models while the software gets built. That loss of deep understanding makes debugging harder, makes attesting to the system’s behavior harder, and even makes designing new features harder.

Over time, the gap between “what the system does” and “what anyone thinks it does” grows.

What do we do about this?

Verification of correctness, robustness, and interoperability can scale to AI-speed code generation, and should be formalized in your processes. Use test-driven development. Make stress tests part of your delivery process. Treat integration tests as mandatory.
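As a rough illustration of why these three checks automate well, here is a small sketch with one test per property. The function under test (`parse_amount_cents`), the `build_line_item` helper, and the `EXPECTED_FIELDS` contract are hypothetical stand-ins, and the robustness test is a simple randomized check standing in for a real stress or fuzz suite.

```python
import random
import string


def parse_amount_cents(text: str) -> int:
    """Hypothetical function under test: convert a string like '12.34' to 1234 cents."""
    whole, _, frac = text.strip().partition(".")
    whole_ok = whole.isascii() and whole.isdigit()
    frac_ok = frac == "" or (frac.isascii() and frac.isdigit() and len(frac) <= 2)
    if not (whole_ok and frac_ok):
        raise ValueError(f"invalid amount: {text!r}")
    return int(whole) * 100 + int(frac.ljust(2, "0"))


# Hypothetical contract that a downstream consumer relies on.
EXPECTED_FIELDS = {"sku": str, "amount_cents": int}


def build_line_item(sku: str, amount_text: str) -> dict:
    return {"sku": sku, "amount_cents": parse_amount_cents(amount_text)}


def test_correctness():
    # Does the code do what it is supposed to do?
    assert parse_amount_cents("12.34") == 1234
    assert parse_amount_cents("7") == 700
    assert parse_amount_cents("0.5") == 50


def test_robustness(trials=1000, seed=0):
    # Does the code fail in a controlled way on arbitrary input?
    rng = random.Random(seed)
    for _ in range(trials):
        junk = "".join(rng.choice(string.printable) for _ in range(rng.randint(0, 12)))
        try:
            result = parse_amount_cents(junk)
        except ValueError:
            continue  # rejecting bad input with ValueError is the allowed failure mode
        assert isinstance(result, int) and result >= 0


def test_contract():
    # Can downstream systems rely on the shape of the output?
    item = build_line_item("A-1", "3.00")
    assert set(item) == set(EXPECTED_FIELDS)
    assert all(isinstance(item[k], t) for k, t in EXPECTED_FIELDS.items())


for test in (test_correctness, test_robustness, test_contract):
    test()
    print(f"{test.__name__}: ok")
```

Each of these runs without a human in the loop, so the cost of verification stays flat no matter how much code an agent produces.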

Verification of compliance and system design relies on processes that don’t scale. Instead of trying to fit a square peg into a round hole (doing manual review on a zillion lines of code), the state of the system needs to be declared and checked automatically. Constraints need to be declared and checked automatically. The same way we went from managing infrastructure by hand to having Terraform manage the state of infrastructure via code, we need state management for software so that system-level verification can scale to AI-speed.
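The Terraform analogy can be sketched in a few lines: declare the intended state, observe the actual state, and report the difference, similar in spirit to a Terraform plan. This is a simplified illustration, not a description of any particular tool. The `DECLARED` and `OBSERVED` structures, the `plan` and `has_drift` functions, and the service names are all assumptions, and in practice the observed state would have to come from somewhere such as static analysis, tracing, or service manifests.

```python
# Declared state: which services exist and which calls between them are intended.
DECLARED = {
    "services": {"checkout", "payments", "analytics"},
    "edges": {("checkout", "payments"), ("checkout", "analytics")},
}

# Observed state: hard-coded here, but assumed to be extracted from the running
# system or the codebase by some hypothetical collector.
OBSERVED = {
    "services": {"checkout", "payments", "analytics", "export-worker"},
    "edges": {
        ("checkout", "payments"),
        ("checkout", "analytics"),
        ("payments", "analytics"),
    },
}


def plan(declared, observed):
    """Compare declared and observed state and list every difference."""
    return {
        "undeclared_services": sorted(observed["services"] - declared["services"]),
        "missing_services": sorted(declared["services"] - observed["services"]),
        "undeclared_edges": sorted(observed["edges"] - declared["edges"]),
        "missing_edges": sorted(declared["edges"] - observed["edges"]),
    }


def has_drift(report):
    """True if any category in the report is non-empty."""
    return any(report.values())


report = plan(DECLARED, OBSERVED)
for kind, items in report.items():
    for item in items:
        print(f"{kind}: {item}")

if has_drift(report):
    print("drift detected: fail the pipeline or update the declaration")
```

The design choice that matters is that drift forces a decision. Either the change is rejected, or the declaration is updated on purpose, so the gap between what the system does and what people think it does cannot grow silently.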

The path forward

AI didn’t break software development. It revealed which parts of our verification systems were scalable and which ones were held together by human context.

The teams that thrive in AI-assisted development won’t be the ones who add more human oversight to compensate. They’ll be the ones who make verification explicit, treating system state, architectural intent, and constraint compliance as things the system itself knows and checks.

Time for the semantic era of software verification. Time for gjalla (yah-lah).